Introduction

By Prasanna Selvaraj

A single Tweet contains over 70 metadata attributes, such as user information, hashtags, public metrics (follower, reply, and retweet counts), annotations, and more. Together with the Tweet text, this metadata holds valuable insights for a developer or business looking to better understand the public conversation. Twitter Enterprise APIs help narrow the conversation down to a subset of Tweets using powerful filters and operators. That subset is usually still large, so further filtering on Tweet metadata is needed to find the most relevant and impactful Tweets.

What is this for?

This guide gives a high-level overview of how to ingest Tweets at scale and “slice and dice” them via metadata to narrow them down to a specific category or sub-category. The platform used to ingest and process the Tweets in this guide is built on the Google Cloud Platform (GCP) and Twitter Enterprise APIs.

This guide is not a step-by-step tutorial. Rather, it provides a high-level architectural view of how cloud-based systems can be implemented to ingest large volumes of Twitter data (like millions of Tweets) and derive insights.

What to expect?

Intermediate and advanced Cloud developers

Familiarity with GCP, AWS, Azure or similar platforms; this guide specifically refers to GCP, but the principles may be transferable

Familiarity with a programming language, message queuing systems, cloud databases, and visualization and analytics tools like DataStudio / PowerBI / Tableau. Ideal for full-stack developers.

What do you get?

An architectural overview of implementing cloud-based systems to ingest large volumes of Twitter data (up to 500M Tweets)

A web application to search Tweets on a Twitter handle or a search query using Twitter’s Full Archive Search API [Sample code - Git Repo]

Sample middleware data (Tweet) loader and UI code based on NodeJS and React. [Sample code - Git Repo]

Database schema based on Tweet payload for Google BigQuery [JSON Schema]

Samples of visualization components (Google DataStudio) to “slice and dice” the Tweets based on Tweet metadata (Annotations, Public Metrics, Hashtags and natural language analysis reports)

Architecture: How to ingest large volumes of Twitter data leveraging GCP

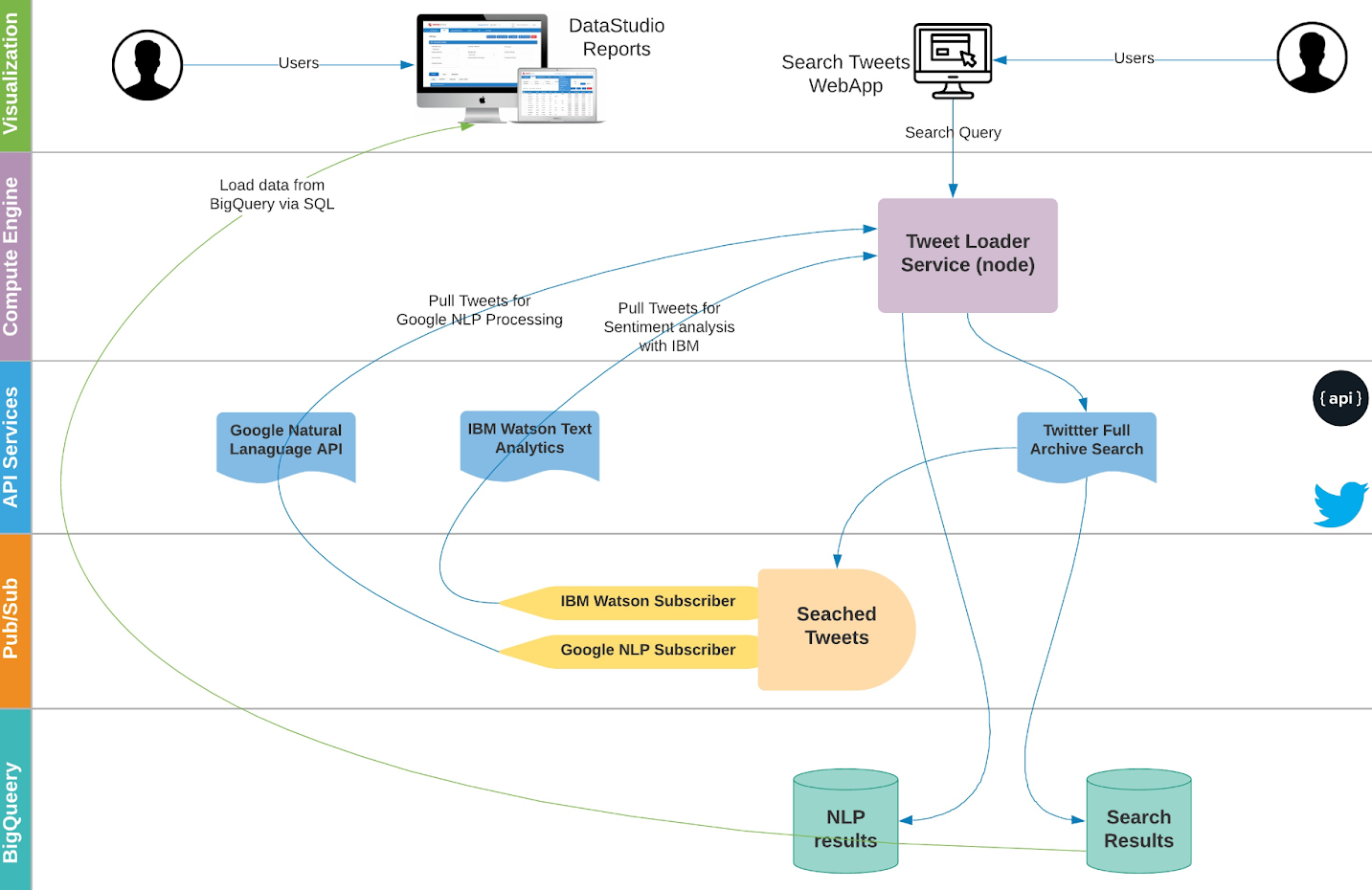

By following the path outlined in the diagram above, developers can search for a Twitter handle or a Twitter search query (for example, “from:USBank” or “\”US Bank\””) via a web application. The search query from the user is passed to a middleware component hosted on Google App Engine, which sends it to the Twitter Full-Archive Search API. The results from Full-Archive Search are persisted in Google BigQuery, where they become available for additional filtering via an analytics layer built with Google DataStudio.

To demonstrate how to further enrich the data, Tweets are also published to a message topic for additional analysis with natural language processing (NLP) services, like Google NLP and IBM Watson. The Node-based middleware on Google App Engine listens to the NLP subscriptions (Google and IBM Watson) for Tweets, and the resulting annotations are persisted in the NLP results BigQuery table.

This is an elastic architecture that can scale to large volumes of Tweets (hundreds of millions). The elasticity is made possible by BigQuery, a serverless, petabyte-scale data warehouse, and by Cloud Pub/Sub, which acts as a shock absorber when ingesting large volumes of Tweets from the Twitter Full-Archive Search API. The middleware process responsible for translating the Tweet payload into the BigQuery schema is hosted on App Engine, a managed serverless compute platform that scales horizontally as load increases.

The raw ingredient (data) is the Tweet itself, which is served by the enterprise Full-Archive Search API.

Search Tweets web application

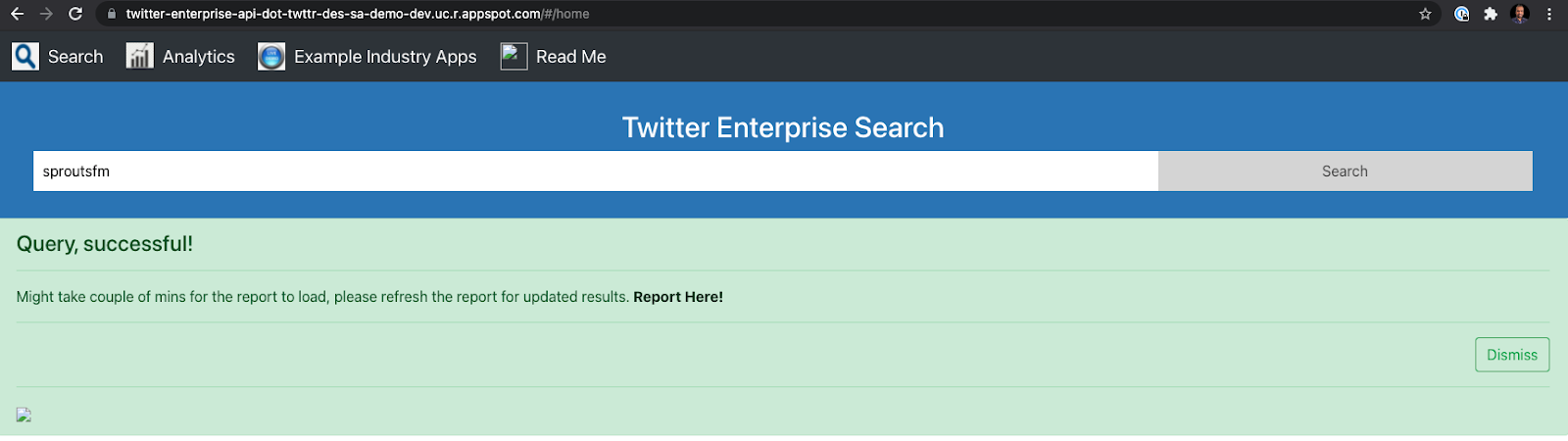

The search web application is the user interface where users enter a search query or a Twitter handle. As output, it generates a DataStudio report link where users can view the analytics. The application is built with React and is integrated with the Node middleware component. Please refer to the code repo here and deployment instructions.

The search.js React component integrates with the Node middleware component for the Twitter Full-Archive Search API.

Tweet loader middleware

The search query from the UI is passed to the middleware (a Node app), which translates the query and sends a POST request to the Twitter Full-Archive Search API. The middleware is responsible for persisting the search results in a Google BigQuery dataset. It is also an open framework that uses a pub/sub event loop for asynchronous processing, so that external natural language processing services and custom machine learning models can be hooked in. Please refer to the GitHub code repo.

This service within the Tweet loader middleware is responsible for receiving the search query from the UI and persisting the results into BigQuery. The messaging infrastructure (topics and subscriptions) required for natural language processing is provisioned before the search function is invoked.
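The sample code in this guide reads a handful of fields from the request body, such as fullArchiveSearch.query, naturalLanguage.on and dataSet.dataSetName. As a rough sketch, a request of that shape could be assembled as shown here. The helper names toSearchTimestamp and buildSearchRequest, the example dataset and category names, and the default switch values are assumptions for illustration and are not taken from the repo. The enterprise search API expects fromDate and toDate as UTC timestamps in YYYYMMDDHHmm form.

```javascript
// Hypothetical helper: formats a Date as the UTC YYYYMMDDHHmm string used by
// the fromDate and toDate parameters of the enterprise search API.
function toSearchTimestamp(date) {
  const pad = (n) => String(n).padStart(2, '0');
  return (
    String(date.getUTCFullYear()) +
    pad(date.getUTCMonth() + 1) +
    pad(date.getUTCDate()) +
    pad(date.getUTCHours()) +
    pad(date.getUTCMinutes())
  );
}

// Hypothetical helper: builds a request body with the fields the middleware
// samples read. Only the field names come from those samples; values are examples.
function buildSearchRequest({ query, category, dataSetName, from, to, nlp = false }) {
  return {
    fullArchiveSearch: {
      query,
      category,
      fromDate: toSearchTimestamp(from),
      toDate: toSearchTimestamp(to)
    },
    dataSet: { dataSetName },
    naturalLanguage: {
      on: nlp,
      google: { googleSvc: nlp },
      watson: { watsonSvc: false }
    },
    machineLearning: { on: false, cxm: { vertexSvc: false } },
    followers: { followers_graph: false }
  };
}

const body = buildSearchRequest({
  query: 'from:USBank',
  category: 'banking',        // example value
  dataSetName: 'tweets_demo', // example value
  from: new Date(Date.UTC(2021, 2, 1)),
  to: new Date(Date.UTC(2021, 2, 18, 12, 30)),
  nlp: true
});
console.log(JSON.stringify(body.fullArchiveSearch));
```

Building the timestamps from UTC getters avoids surprises when the server and the user are in different time zones.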

Sample code: Search and store Tweets

    // Searches the Full-Archive Search API one page at a time, stores each page
    // in BigQuery and, when NLP or ML is enabled, publishes it to a Pub/Sub topic.
    // Assumes axios, config, fas_svcs, pub_sub, utils and getFollowers are in scope.
    async function fullArchiveSearch(reqBody, nextToken) {
      // validate requestBody before Search
      const nlpSwitch = reqBody.naturalLanguage.on;
      const mlSwitch = reqBody.machineLearning.on;
      const fas = reqBody.fullArchiveSearch;
      const query = { query: fas.query, maxResults: 500, fromDate: fas.fromDate, toDate: fas.toDate };
      // A "next" token from the previous response requests the following page.
      if (nextToken != null) {
        query.next = nextToken;
      }
      const axiosConfig = {
        method: 'post',
        url: config.fas_search_url,
        auth: {
          username: config.gnip_username,
          password: config.gnip_password
        },
        data: query
      };
      console.log('query ', JSON.stringify(query));
      try {
        const resp = await axios(axiosConfig);
        if (resp != null) {
          console.log('Search results into BQ and Publish into Topics');
          if (resp.data != null && resp.data.results != null && resp.data.results.length > 0) {
            fas_svcs.insertResults(resp.data.results, reqBody);
            // publish to topic
            if (nlpSwitch === true || mlSwitch === true) {
              pub_sub.publishTweets(resp.data.results, fas.category, reqBody.topicName);
              console.log('Tweets published to topic ', reqBody.topicName);
            }
          }
          if (resp.data != null && resp.data.next != null) {
            // More pages remain: keep paging in the background. The call is not
            // awaited, so the caller gets its answer after the first page.
            fullArchiveSearch(reqBody, resp.data.next);
          } else {
            // no next token - end of FAS insert followers
            if (reqBody.followers.followers_graph === true) {
              fas_svcs.queryBQTable(utils.getEngagementsSQL(reqBody)).then((rows) => {
                getFollowers(rows, reqBody);
              });
            }
          }
          return { message: 'Query result persisted' };
        }
      } catch (error) {
        // Errors are logged and returned as the resolved value rather than thrown,
        // so the un-awaited recursive calls never cause an unhandled rejection.
        console.log('ERROR --- ', error);
        return error;
      }
    }
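The search sample follows the next token returned by the API until no token is left. The same idea can be sketched as an iterative loop that waits for each page before requesting the following one, which makes it easier to know when the whole search has finished. This is only an illustration: searchAllPages, fetchPage and onPage are hypothetical names, and fetchPage stands in for the HTTP call so that the loop can run without network access.

```javascript
// Hypothetical sketch of token-based pagination.
// fetchPage(query) must resolve to { results: [...], next: <token or undefined> }.
// onPage(results) is called once per non-empty page, for example to store it.
async function searchAllPages(baseQuery, fetchPage, onPage) {
  let nextToken;
  let total = 0;
  do {
    // Only add "next" when a token was returned by the previous page.
    const query = nextToken ? { ...baseQuery, next: nextToken } : { ...baseQuery };
    const page = await fetchPage(query);
    const results = (page && page.results) || [];
    if (results.length > 0) {
      await onPage(results);
      total += results.length;
    }
    nextToken = page ? page.next : undefined;
  } while (nextToken);
  return total;
}

// Usage with a fake two-page response instead of a real API call.
const fakePages = {
  first: { results: [{ id: '1' }, { id: '2' }], next: 'abc' },
  abc: { results: [{ id: '3' }] }
};
const fakeFetch = async (query) => fakePages[query.next || 'first'];

searchAllPages({ query: 'from:USBank', maxResults: 500 }, fakeFetch, async (tweets) => {
  console.log('page with', tweets.length, 'Tweets');
}).then((total) => console.log('total Tweets:', total));
```

Awaiting each page trades latency for a clear completion signal; a fire-and-forget variant returns sooner but cannot report when the last page has been stored.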

Sample code: Provision database and messaging infrastructure

    // Provisions the Pub/Sub topic and one subscription per enabled service
    // before the search starts. Assumes config, createTopic, createSubscription
    // and listenForMessages are in scope.
    async function setupMsgInfra(requestBody) {
      const nlpObj = requestBody.naturalLanguage;
      const mlObj = requestBody.machineLearning;
      const nlpSwitch = nlpObj.on;
      const mlSwitch = mlObj.on;
      const category = requestBody.fullArchiveSearch.category;
      const dataSetName = requestBody.dataSet.dataSetName;

      // Nothing to provision when both NLP and ML processing are switched off.
      if (nlpSwitch === false && mlSwitch === false) {
        return 'NLP, ML setup skipped';
      }

      // One topic per dataset and category; each service gets its own subscription.
      const topicName = config.nlp_topic + '_' + dataSetName + '_' + category;
      await createTopic(topicName);
      console.log('Topic created ', topicName);
      requestBody.naturalLanguage.topicName = topicName;
      requestBody.machineLearning.topicName = topicName;

      // Each subscription is awaited so that it exists before any Tweet is published.
      if (nlpObj.google.googleSvc === true && nlpSwitch === true) {
        const subscriptionName = topicName + '_' + 'subscription';
        await createSubscription(topicName, subscriptionName);
        console.log('Subscription created ', subscriptionName);
        requestBody.naturalLanguage.google.subscriptionName = subscriptionName;
        listenForMessages(requestBody, 'GCP');
      }
      if (nlpObj.watson.watsonSvc === true && nlpSwitch === true) {
        const watsonSubscriptionName = topicName + '_' + 'watson_subs';
        await createSubscription(topicName, watsonSubscriptionName);
        console.log('Subscription created ', watsonSubscriptionName);
        requestBody.naturalLanguage.watson.subscriptionName = watsonSubscriptionName;
        listenForMessages(requestBody, 'WATSON');
      }
      if (mlObj.cxm.vertexSvc === true) {
        const cxmSubscriptionName = topicName + '_' + 'cxm_subs';
        await createSubscription(topicName, cxmSubscriptionName);
        console.log('Subscription created ', cxmSubscriptionName);
        requestBody.machineLearning.cxm.subscriptionName = cxmSubscriptionName;
        listenForMessages(requestBody, 'CXM');
      }
      return topicName;
    }

The Tweets are persisted into BigQuery and published into topics in parallel. Asynchronous NLP services are responsible for listening to the subscriptions, processing, and annotating messages with natural language analysis from IBM Watson and Google Natural Language services.
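To make the subscriber side more concrete, here is a minimal sketch of a message handler, under a few assumptions: each message carries one Tweet serialized as JSON, analyze is a hypothetical stand-in for a call to an NLP service, and persist is a hypothetical stand-in for the BigQuery insert. The message shape (a data buffer plus ack and nack methods) mirrors what the Node.js Pub/Sub client delivers to a message listener.

```javascript
// Hypothetical handler for one Pub/Sub message.
// analyze(text) resolves to an annotation object; persist(row) stores it.
async function handleTweetMessage(message, analyze, persist) {
  try {
    const tweet = JSON.parse(message.data.toString('utf8'));
    const annotation = await analyze(tweet.text);
    await persist({ tweet_id: tweet.id, ...annotation });
    // Acknowledge only after the annotation is stored.
    message.ack();
    return true;
  } catch (err) {
    // Negative acknowledgement asks Pub/Sub to redeliver the message.
    message.nack();
    return false;
  }
}

// With the Node.js Pub/Sub client, a handler like this could be attached as:
//   subscription.on('message', (m) => handleTweetMessage(m, analyze, persist));
```

Acknowledging after the write, rather than on receipt, means a crash between the two steps leads to a redelivery instead of a lost annotation. The trade-off is that persist should tolerate duplicates.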

The Tweet payload from the Twitter Full-Archive Search API is persisted into BigQuery as a flat table structure, enabling easier “slicing and dicing” of the Tweet payload for analytics. BigQuery lets a complex nested JSON structure map directly to its underlying database schema. For instance, JSON records and arrays are unnested and converted into a single tuple within BigQuery. This not only makes data translation easier, but also makes SQL querying much simpler, since it follows the same conventions as standard SQL. The insert function breaks down the Tweet payload into BigQuery rows and ingests Tweets in batches of 500 per insert operation. That number matches the maximum page size of a search request and is also the batch size Google recommends for a single streaming insert request.
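The batching described above can be sketched in a few lines. The row mapping here is an assumption for illustration: toRow and toBatches are hypothetical helpers, and the output column names (hashtags, mentions) are not taken from the guide's schema. Only the input field names (entities.hashtags[].text, entities.user_mentions[].screen_name) follow the Tweet payload.

```javascript
const BATCH_SIZE = 500;

// Hypothetical mapping from a nested Tweet payload to a row with repeated fields.
function toRow(tweet) {
  const entities = tweet.entities || {};
  return {
    id: tweet.id,
    text: tweet.text,
    hashtags: (entities.hashtags || []).map((h) => h.text),
    mentions: (entities.user_mentions || []).map((m) => m.screen_name)
  };
}

// Splits rows into consecutive batches of at most `size` rows each.
function toBatches(rows, size = BATCH_SIZE) {
  const batches = [];
  for (let i = 0; i < rows.length; i += size) {
    batches.push(rows.slice(i, i + size));
  }
  return batches;
}

// Usage: 1201 synthetic Tweets end up in three insert operations.
const sampleTweets = Array.from({ length: 1201 }, (_, i) => ({
  id: String(i),
  text: 'Tweet ' + i,
  entities: { hashtags: [{ text: 'PS5' }], user_mentions: [] }
}));
const batches = toBatches(sampleTweets.map(toRow));
console.log(JSON.stringify(batches.map((b) => b.length))); // [500,500,201]
```

Each batch would then be handed to a single insert call, so one failed request affects at most 500 rows.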

Database and schema

The search results are persisted in a Google BigQuery dataset, which directly feeds the visualization layer, DataStudio. Google BigQuery is a key component: it not only feeds data to analytics, but also expands the JSON payload into a flat table structure. JSON arrays are translated to row tuples in BigQuery. Here’s the BigQuery table schema for the Tweet payload.

This is an example Tweet payload that you could surface from BigQuery using SQL:

    {
      "text": "Anyone else in the same situation with their @Walmart #PS5 order from the 3/18 drop? I got a shipping notice and tr… https://t.co/yOLbh3WpqZ",
      "id": "1382013470464442400",
      "entities": {
        "hashtags": [
          {
            "text": "PS5",
            "indices": ["54", "58"]
          }
        ],
        "urls": [],
        "user_mentions": [
          {
            "id": "17137891",
            "id_str": "17137891",
            "name": "Walmart",
            "screen_name": "Walmart",
            "indices": ["45", "53"]
          }
        ],
        "symbols": [],
        "polls": [],
        "media": [],
        "annotations": {
          "context": [
            {
              "context_domain_id": "79",
              "context_domain_id_str": "79",
              "context_domain_name": "Video Game Hardware",
              "context_domain_description": "Video Game Hardware",
              "context_entity_id": "1201630019065274400",
              "context_entity_id_str": "1201630019065274369",
              "context_entity_name": "PlayStation 5",
              "context_entity_description": null
            },
            {
              "context_domain_id": "45",
              "context_domain_id_str": "45",
              "context_domain_name": "Brand Vertical",
              "context_domain_description": "Top level entities that describe a Brands industry",
              "context_entity_id": "781974596706635800",
              "context_entity_id_str": "781974596706635776",
              "context_entity_name": "Retail",
              "context_entity_description": null
            },
            {
              "context_domain_id": "45",
              "context_domain_id_str": "45",
              "context_domain_name": "Brand Vertical",
              "context_domain_description": "Top level entities that describe a Brands industry",
              "context_entity_id": "781974597310615600",
              "context_entity_id_str": "781974597310615553",
              "context_entity_name": "Entertainment",
              "context_entity_description": null
            },
            {
              "context_domain_id": "46",
              "context_domain_id_str": "46",
              "context_domain_name": "Brand Category",
              "context_domain_description": "Categories within Brand Verticals that narrow down the scope of Brands",
              "context_entity_id": "781974597218340900",
              "context_entity_id_str": "781974597218340864",
              "context_entity_name": "Video Games",
              "context_entity_description": null
            },
            {
              "context_domain_id": "46",
              "context_domain_id_str": "46",
              "context_domain_name": "Brand Category",
              "context_domain_description": "Categories within Brand Verticals that narrow down the scope of Brands",
              "context_entity_id": "781974597570732000",
              "context_entity_id_str": "781974597570732032",
              "context_entity_name": "Multi-Purpose Department",
              "context_entity_description": null
            },
            {
              "context_domain_id": "47",
              "context_domain_id_str": "47",
              "context_domain_name": "Brand",
              "context_domain_description": "Brands and Companies",
              "context_entity_id": "10026347212",
              "context_entity_id_str": "10026347212",
              "context_entity_name": "Walmart",
              "context_entity_description": null
            },
            {
              "context_domain_id": "47",
              "context_domain_id_str": "47",
              "context_domain_name": "Brand",
              "context_domain_description": "Brands and Companies",
              "context_entity_id": "10027048853",
              "context_entity_id_str": "10027048853",
              "context_entity_name": "PlayStation",
              "context_entity_description": null
            },
            {
              "context_domain_id": "79",
              "context_domain_id_str": "79",
              "context_domain_name": "Video Game Hardware",
              "context_domain_description": "Video Game Hardware",
              "context_entity_id": "10027048853",
              "context_entity_id_str": "10027048853",
              "context_entity_name": "PlayStation",
              "context_entity_description": null
            }
          ],
          "entity": [
            {
              "char_start_index": "172",
              "char_end_index": "173",
              "probability": "0.9051",
              "type": "Place",
              "normalized_text": "CA"
            },
            {
              "char_start_index": "178",
              "char_end_index": "179",
              "probability": "0.9572",
              "type": "Place",
              "normalized_text": "TX"
            }
          ]
        }
      }
    }
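In the payload above, several context_entity_id values differ from their context_entity_id_str counterparts in the trailing digits (for example 1201630019065274400 versus 1201630019065274369). That pattern is consistent with a 64-bit ID having passed through a JavaScript number, which cannot represent every integer above 2^53 - 1. The short sketch below illustrates the effect and why the _str fields, or BigInt, are the safer choice when comparing or joining on IDs. The helper sameId is a hypothetical name.

```javascript
// context_entity_id_str for "PlayStation 5" in the example payload.
const idStr = '1201630019065274369';

// Converting to a Number loses the low-order digits.
const asNumber = Number(idStr);
console.log(Number.isSafeInteger(asNumber)); // false
console.log(String(asNumber) === idStr);     // false

// BigInt keeps every digit.
const asBigInt = BigInt(idStr);
console.log(asBigInt.toString() === idStr);  // true

// Hypothetical helper: compares two IDs given as decimal strings.
function sameId(a, b) {
  return BigInt(a) === BigInt(b);
}

console.log(sameId('1201630019065274369', '1201630019065274400')); // false
console.log(sameId('10027048853', '10027048853'));                 // true
```

Small IDs such as 10027048853 survive the conversion unchanged, which is why the two fields agree for some entries in the payload and not for others.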

Visualization and analytics: slice and dice with Tweet metadata

The visualization and analytics layer, powered by DataStudio, lets users derive insights from the Tweet data persisted in BigQuery. Google DataStudio provides visualizations in the form of pluggable charts that can be hooked up to a BigQuery data source. Below are a few examples of visualizations created in DataStudio with Twitter data.

Filter Tweets with Tweet annotations

This report overlays the Tweets with context and entity annotations (which are first-party Tweet annotations, delivered as part of the Tweet payload returned by a query). Tweets can be analyzed by choosing an annotation or any of its sub-categories. This type of filtering returns a smaller set of Tweets that is specific to the selected context annotation category. An example context annotation report is shown below:
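Outside of a report, the same kind of filter can be expressed directly against the payload. The following is a minimal sketch that assumes Tweets shaped like the example payload in this guide, with context annotations under entities.annotations.context. The function names contextAnnotations and filterByContext are hypothetical.

```javascript
// Returns the context annotations of a Tweet, or an empty list if there are none.
function contextAnnotations(tweet) {
  const entities = tweet.entities || {};
  const annotations = entities.annotations || {};
  return annotations.context || [];
}

// Keeps Tweets annotated with the given domain and, optionally, a specific entity.
function filterByContext(tweets, domainName, entityName) {
  return tweets.filter((tweet) =>
    contextAnnotations(tweet).some(
      (c) =>
        c.context_domain_name === domainName &&
        (entityName === undefined || c.context_entity_name === entityName)
    )
  );
}

const tweets = [
  { id: '1', entities: { annotations: { context: [
    { context_domain_name: 'Brand', context_entity_name: 'Walmart' },
    { context_domain_name: 'Brand', context_entity_name: 'PlayStation' }
  ] } } },
  { id: '2', entities: { annotations: { context: [
    { context_domain_name: 'Brand Vertical', context_entity_name: 'Retail' }
  ] } } },
  { id: '3', entities: {} }
];

console.log(JSON.stringify(filterByContext(tweets, 'Brand').map((t) => t.id)));                // ["1"]
console.log(JSON.stringify(filterByContext(tweets, 'Brand Vertical', 'Retail').map((t) => t.id))); // ["2"]
```

Passing only a domain selects the broad category, while adding an entity name narrows the result to one sub-category.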

Filter Tweets with hashtags and mentions

The hashtags and mentions in the Tweets are surfaced as filters that can be used to narrow down to a subset of Tweets.
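As an illustration of what such a filter does, here is a small sketch over Tweets shaped like the example payload, where hashtags live in entities.hashtags[].text and mentions in entities.user_mentions[].screen_name. The function matchesEntity and its case-insensitive matching are assumptions made for this example.

```javascript
// Returns true when the Tweet carries the given hashtag and/or mention.
// A leading "#" or "@" in the filter value is ignored; matching ignores case.
function matchesEntity(tweet, { hashtag, mention } = {}) {
  const entities = tweet.entities || {};
  const tags = (entities.hashtags || []).map((h) => h.text.toLowerCase());
  const names = (entities.user_mentions || []).map((m) => m.screen_name.toLowerCase());
  const tagOk =
    hashtag === undefined || tags.includes(hashtag.replace(/^#/, '').toLowerCase());
  const mentionOk =
    mention === undefined || names.includes(mention.replace(/^@/, '').toLowerCase());
  return tagOk && mentionOk;
}

const sample = [
  { id: '1', entities: { hashtags: [{ text: 'PS5' }], user_mentions: [{ screen_name: 'Walmart' }] } },
  { id: '2', entities: { hashtags: [{ text: 'Xbox' }], user_mentions: [] } },
  { id: '3', entities: { hashtags: [], user_mentions: [{ screen_name: 'Walmart' }] } }
];

const withTag = sample.filter((t) => matchesEntity(t, { hashtag: '#ps5' }));
const withBoth = sample.filter((t) => matchesEntity(t, { hashtag: 'PS5', mention: '@walmart' }));
console.log(JSON.stringify(withTag.map((t) => t.id)));  // ["1"]
console.log(JSON.stringify(withBoth.map((t) => t.id))); // ["1"]
```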

Filter Tweets with public metrics

The Enterprise Full-Archive Search API payload has four metrics that correspond to public engagement: Like, Reply, Retweet and Quote Tweet counts. This report provides a mechanism to filter Tweets based on these public metrics. The report can be drilled down by day, month, or year to filter Tweets.

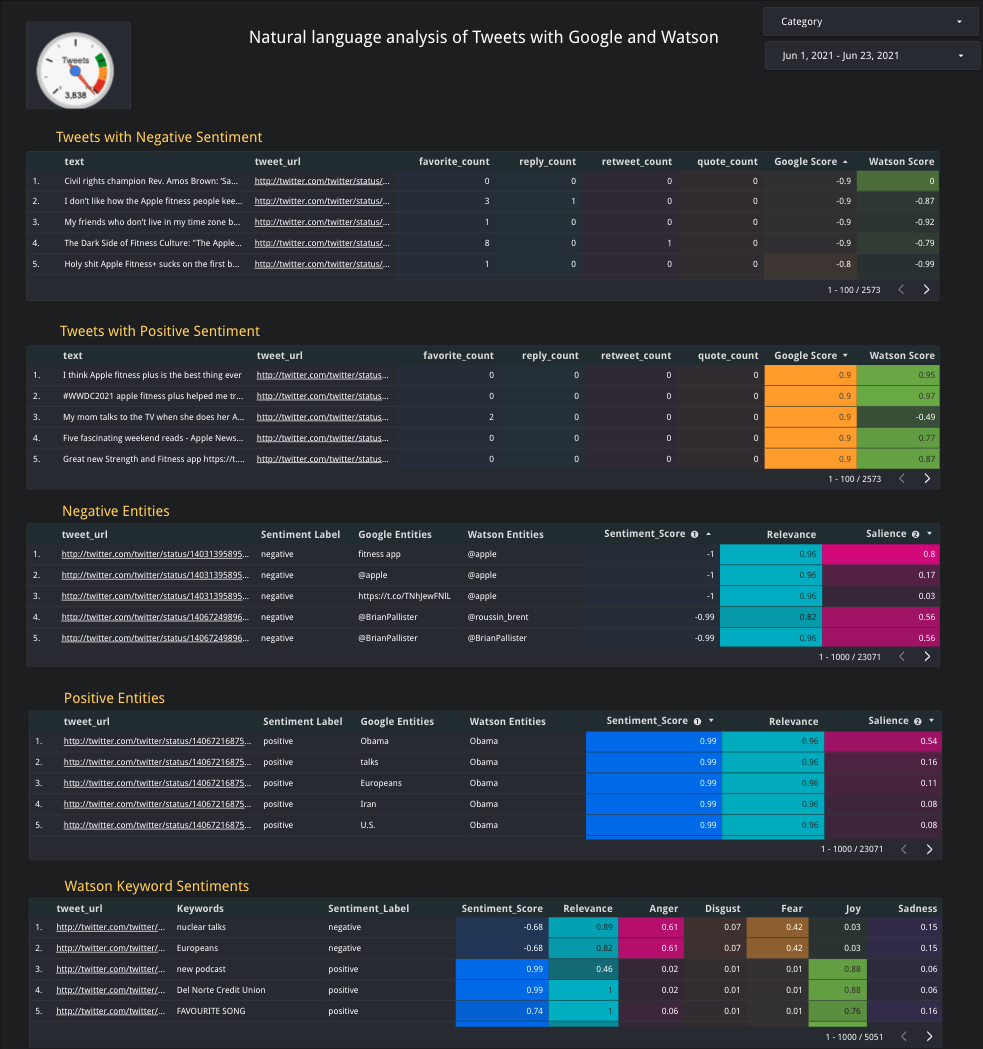

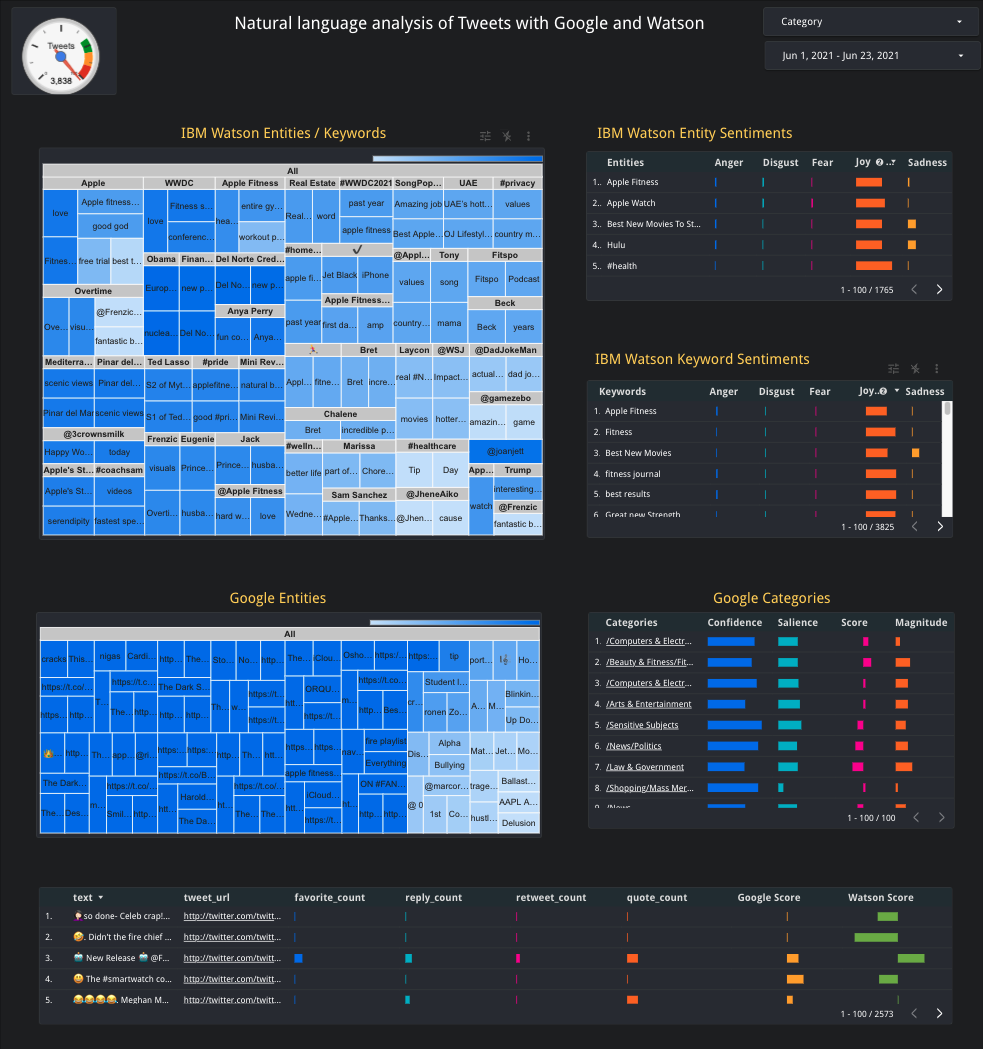
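The drill-down by day, month, or year amounts to grouping rows by a prefix of their timestamp. The sketch below shows that idea on a few hand-written rows. It assumes that created_at has been stored as an ISO 8601 UTC string and that the metric columns are named favorite_count and retweet_count; both the row shape and the function names bucketKey and retweetsByBucket are assumptions for illustration.

```javascript
// Number of leading characters of an ISO 8601 timestamp that identify each bucket.
const PREFIX_LENGTH = { year: 4, month: 7, day: 10 };

function bucketKey(isoTimestamp, granularity) {
  const length = PREFIX_LENGTH[granularity];
  if (length === undefined) {
    throw new Error('Unknown granularity: ' + granularity);
  }
  return isoTimestamp.slice(0, length);
}

// Sums Retweets per bucket, keeping only Tweets with at least minLikes Likes.
function retweetsByBucket(rows, granularity, minLikes = 0) {
  const totals = {};
  for (const row of rows) {
    if (row.favorite_count < minLikes) continue;
    const key = bucketKey(row.created_at, granularity);
    totals[key] = (totals[key] || 0) + row.retweet_count;
  }
  return totals;
}

const rows = [
  { created_at: '2021-03-18T10:00:00Z', favorite_count: 12, retweet_count: 3 },
  { created_at: '2021-03-18T22:15:00Z', favorite_count: 1, retweet_count: 0 },
  { created_at: '2021-04-02T08:30:00Z', favorite_count: 40, retweet_count: 9 }
];

console.log(JSON.stringify(retweetsByBucket(rows, 'day')));       // {"2021-03-18":3,"2021-04-02":9}
console.log(JSON.stringify(retweetsByBucket(rows, 'month', 10))); // {"2021-03":3,"2021-04":9}
```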

Filter Tweets with Google and Watson natural language

Natural language services like Watson and Google provide additional dimensions to filter the Tweets based on sentiments, emotions, entities and keywords. A score (sentiment score, based on salience or relevance) is presented for each of these metrics, which can be leveraged for filtering. Below are examples of natural language analysis with Watson and Google.
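Filtering on such a score is a simple range check. As a hedged sketch, assume each row of the NLP results table has been read into an object with a tweet_id and a sentiment_score between -1 (negative) and 1 (positive); these column names and the function filterBySentiment are hypothetical.

```javascript
// Keeps rows whose sentiment score lies within [min, max], most negative first.
function filterBySentiment(rows, { min = -1, max = 1 } = {}) {
  return rows
    .filter((r) => r.sentiment_score >= min && r.sentiment_score <= max)
    .sort((a, b) => a.sentiment_score - b.sentiment_score);
}

const nlpRows = [
  { tweet_id: '1', sentiment_score: 0.8 },
  { tweet_id: '2', sentiment_score: -0.6 },
  { tweet_id: '3', sentiment_score: 0.1 },
  { tweet_id: '4', sentiment_score: -0.9 }
];

// Surface clearly negative Tweets, for example for customer care follow-up.
const negative = filterBySentiment(nlpRows, { max: -0.5 });
console.log(JSON.stringify(negative.map((r) => r.tweet_id))); // ["4","2"]
```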