Tutorials / How to's

How to store streaming Tweets in a Google Sheet

This guide will help developers get started with and learn how to consume the Twitter API filtered stream endpoint, which allows you to retrieve Tweets in real-time, and store them in a Google Sheet for future use.

Note: While this is designed for anyone to use, elements of this tutorial require an understanding of Node.

Relevant Endpoints

Introduction

Twitter APIs provide developers with access to data about what’s happening in the world. They can then use this data to build applications that allow them to tap into current or past conversations.

This allows you to imagine and reimagine how the world can consume the data provided by these Tweets, and come up with various ways to understand, serve and enhance the public conversation.

The filtered stream endpoint provides a way for developers to apply rules that filter Tweets in real-time. Using this endpoint, you can receive a constant stream of Tweets that match rules you define for the stream and/or your business requirements.

However, it is not always easy to handle large volumes of Tweets, especially in cases when human interaction or intervention is required to process and attend to them. For example, you may want to retrieve all Tweets that mention an event or a trending hashtag in order to analyze them for sponsorship opportunities.

In such cases, you might want to store the Tweets for later use or peruse, like in a Google Sheet.

The guide will lead you through the following steps:

- Create a rule to filter the stream

- Consume the filtered stream endpoint

- Create a Google Sheet to use as the Tweet store

- Store incoming Tweets in the Google Sheet

Where to find the code

The code used in this guide can be found on GitHub. It is a Node Typescript project.

The README.md file contains instructions for setting this up on your local machine.

Prerequisites

- Twitter Developer Account:

To run this code, you must have a Twitter developer account.

Once you have an approved developer account, create a Project and an App inside it. You will then generate a Bearer Token from the App keys and tokens section, which is required for this guide. - Google Sheets API credentials and a Google Spreadsheet to write to.

Follow Step One in this guide to enable the Google API, create API credentials with access to a Google Spreadsheet, and download a key file (take care to download it in JSON format).

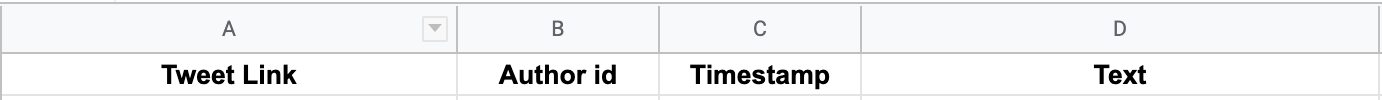

Note: You’ll need to set a header row inside the spreadsheet. In this guide, you’ll extract some data from the Tweet stream, so you’ll need to set up the header line like the below:

Let's begin!

Step one: Install the code and configure your environment

If you want to work with the code on your computer, clone the GitHub repository and follow the instructions in the README.md file to set it up.

Note: You’ll need to have Node installed to be able to use this project.

Next, you’ll need to define the following environment variables. You can use your shell or create a .env file in the root directory of the project and add the following into it, making sure to not include the angle brackets themselves.

BEARER_TOKEN=<Bearer Token>

TW_TERM=<A keyword you are interested in>

TW_HASHTAG=<A hashtag you are interested in>

TW_GOOGLE_DOC_ID=<The id of the Google Sheet to store the tweets in>

The code creates a filter rule that uses TW_TERM and TW_HASHTAG to filter the stream. It creates a rule to capture only Tweets that have the keyword or the hashtag variables in them. So for example, if you entered the following...

TW_TERM=Tennis

TW_HASHTAG=Wimbledon

By entering this keyword and hashtag into the environmental variable, you will be adding the following rule to your stream: Tennis OR #Wimbledon

The Google Sheet id is the last part of the Sheet’s URL:

https://docs.google.com/spreadsheets/d/xxxxxxx

You will also need to store the JSON key file you downloaded from Google in the root directory of the project. Make sure it is named client_secret.json.

Once you have done this, you can run the code and it should work - depending on the search term and hashtag you set, Tweets should start appearing in your spreadsheet.

If the code doesn’t work, it is most likely that something was misconfigured. For example - the TW_TERM or TW_HASHTAG were not defined in the environment.

If that happens, your first step is to check the console output,where the code logs notify you of any such issues.

If Tweets do not appear in your spreadsheet,it might be that your filter is too restrictive. Use the details in the walkthrough below to fine-tune it.

The remainder of this guide walks you through the code to explain what it does and how you can use these concepts in your own code.

Step two: Walkthrough the code

import dotenv from 'dotenv'

dotenv.config()

import { GoogleSpreadsheet, GoogleSpreadsheetWorksheet } from 'google-spreadsheet'

import needle from 'needle'

// Setup access to Twitter and Google APIs using env variables

const token = process.env.TW_BEARER_TOKEN

if (!token) {

terminate(

'Config mismatch. Expected TW_BEARER_TOKEN environment variable to contain a Twitter API token. Found undefined',

)

}

const GOOGLE_CREDENTIALS = require('../client_secret.json')

Lines 1-2: Use the dotenv package to easily load variables from .env (Note: You only need to do this if you choose to define your environment variables in a file).

Lines 3-4: Import the Google Sheets objects and the needle package for http streaming.

Lines 7-12: Ensure you have a Twitter Bearer Token configured. The program will not be able to access the Twitter API without it and will return an error if it can’t find it.

Line 14: Load the Google API credentials from the key file.

// #1

const STREAM_API_URL = 'https://api.twitter.com/2/tweets/search/stream'

// #2

const RULES_API_URL = `${STREAM_API_URL}/rules`

// #3

const auth_headers = {

headers: {

Authorization: `Bearer ${token}`,

'Content-type': 'application/json',

},

timeout: 20000,

}

Comments in code:

#1: This is the Twitter API v2 filtered stream endpoint.

#2: You use this endpoint to create the rule to filter the stream for the Tweets you are interested in.

#3: Every call to the API requires the Bearer authorization header with the token. (You got the token in line 7).

The main program

The code uses an IIFE (Immediately Invoked Function Expression) that deals with all the different pieces of the puzzle (the various functions are discussed in the following section)

// Start the program

(async () => {

// #4

await setupRules()

// #5

const doc = await getGoogleDoc()

if (!doc) {

terminate('Could not connect to Google Document')

return

}

const sheet = doc.sheetsByIndex[0]

// #6

handleTweets(sheet)

})()

#4: Set up the rules required to filter the stream so that the program only receives the Tweets you are interested in.

#5: Connect to the Google Spreadsheet you’ll use to store the Tweets.

#6: Start streaming the Tweets and storing them into the spreadsheet.

Function discussion

Set up rules

// Sets up a rule based filter on the search endpoint.

// It uses a company name and a hashtag from the environment and adds a rule to the search.

async function setupRules() {

// #7

const search_term = process.env.TW_TERM

if (!search_term) {

terminate('Config mismatch. Expecting TW_TERM environment variable to contain name of company')

}

const search_hashtag = process.env.TW_HASHTAG

if (!search_hashtag) {

terminate(

'Config mismatch. Expecting TW_HASHTAG environment variable to contain a campaign hashtag',

)

}

// #8

const filter_rule = `(${search_term} OR #${search_hashtag}) has:links -is:retweet`

// Only add this filter rule if it is not already defined.

// #9

return needle('get', RULES_API_URL, {}, auth_headers).then(async (res) => {

// #10

if (

res.body &&

(!res.body.data || !res.body.data.some((rule: Rule) => rule.value === filter_rule))

) {

// #11

await addRule(filter_rule)

}

console.log(

'\x1b[36m%s\x1b[0m',

`Setting filter to search for original tweets containing ${search_term} or #${search_hashtag} with links`,

)

})

}

In step one, you defined the TW_TERM and TW_HASHTAG environment variables to hold a search term and a hashtag value to use in the filter.

The code in the setupRules() function uses these to configure the rule (or filter) you are going to use for the stream endpoint.

This function is async, as it uses promises to make http calls, which have to complete before the program can start listening on the stream.

#7: Ensure that the TW_TERM and TW_HASHTAG variables exist.

#8: Construct the filter rule to use.

The rule constructed here will filter the stream for Tweets that contain either TW_TERM or the TW_HASHTAG as a hashtag, as well as links. It will also filter out any Retweets to remove consumption of the same information.

If you supplied the following values in your environment variables:

TW_TERM=Tennis

TW_HASHTAG=Wimbledon

The resulting filter rule shall be:

(Tennis OR #Wimbledon) has:links -is:retweet

| Statement | Will only return Tweets that |

|---|---|

| (Tennis OR #Wimbledon) | Mention Tennis or #Wimbledon |

| has:links | Contain links, QT, and Tweets with media |

| -is:retweet | Are not Retweets (a - sign negates the is:retweet statement |

Note: The way the filter rule is constructed here serves for demonstration purposes only. As you explore the code, it would be good to change the rule in line 38 to any valid value. You don’t have to use the default construct provided here.

#9: The program makes a GET call to the rules endpoint to retrieve the set of currently active rules applied to the stream.

The call to needle returns a promise, and the chained then() statement handles the result of that promise,which is the result of the GET call itself.

#10: The code checks that the result is well formed (for brevity, there is no error handling code shown). If it is, the code checks the set of rules returned to see if the rule defined already exists using the some statement:

!res.body.data.some((rule: Rule) => rule.value === filter_rule))

Which iterates over the rules returned (under the data key) and tests each one’s value against the rule constructed in comment #8.

#11: If there are no current filtering rules applied to the stream (in other words, the response did not contain a data key at all) - or no existing rule matches the filter - then the code calls the addRule() function (described in the next section). Note that the code uses await to ensure the function completes before moving on.

For more examples on how you can build rules, see the documentation on building rules.

Adding a rule to the stream

// Helper function to add a rule to the stream

async function addRule(filter_rule: string) {

// #12

const filter = {

add: [

{

value: filter_rule,

},

],

}

// #13

return needle('post', RULES_API_URL, filter, auth_headers)

.then().catch((err) => terminate(err.message))

}

#12: Construct the request parameters. This follows the syntax described in the rules endpoint API reference page.

#13: Makes a POST call to the rules url to add the filtering rule to the stream. Note that this call again uses the Promise mechanism. The code uses an empty then() to specify that nothing needs to be done if the call is successful.

terminate() is a helper function to log an error and exit. It can be found in lines 99-102 of the code repo.

Connect to the Google Spreadsheet

// #14

async function getGoogleDoc() {

if (!process.env.TW_GOOGLE_DOC_ID) {

terminate(

'Config mismatch. Expecting TW_GOOGLE_DOC_ID environment variable to contain a Google Spreadsheet id. Found undefined',

)

return

}

// #15

const doc = new GoogleSpreadsheet(process.env.TW_GOOGLE_DOC_ID)

await doc.useServiceAccountAuth(GOOGLE_CREDENTIALS)

await doc.loadInfo()

return doc

}

#14: Ensure that the TW_GOOGLE_DOC_ID environment variable contains an id.

#15: Connect to the Google Spreadsheet document with the id provided in TW_GOOGLE_DOC_ID. Note the use of GOOGLE_CREDENTIALS (loaded in line 14).

Handle the Tweets stream

// Listen to a stream of tweets from Twitter's API

function handleTweets(sheet: GoogleSpreadsheetWorksheet) {

// Open a stream with the tweet data we are interested in

// #16

const extraFields = 'tweet.fields=created_at&expansions=author_id'

// #17

const stream = needle.get(`${STREAM_API_URL}?${extraFields}`, auth_headers)

// As tweets stream in - transform and store them

// #18

stream

.on('data', (data) => storeTweetInSheet(sheet, data))

.on('err', (error) => {

terminate(error.code)

})

return stream

}

This function takes an instance of GoogleSpreadSheetWorksheet as a parameter. It uses it to store Tweet data in.

#16: Defines extra fields the program is interested in.

When connected to the stream, your program will receive the default Tweet object fields in your response: id and text.

To receive additional fields beyond id and text, you need to specify those fields in your request with the field and/or expansion parameters.

To receive a Tweet’s creation time and information about the author within the Tweet object, you will need to request two different sets of fields:

- The additional tweet.created_at field in the primary Tweet objects.

- The default fields on the author’s user object: id, name, and username.

To retrieve the additional fields in the Tweet object, the code adds the following query parameters to your request:

| Key | Value | Returned fields |

|---|---|---|

| tweet.fields | created_at | tweets.created_at |

| expansions | author_id | includes.users.id includes.users.name includes.users.username |

#17: needle module’s get function returns a stream, which is exactly what you need here. #18: Define the behavior expected of the stream. In this case,the storeTweetInSheet() function is called for every Tweet received. In case of an error, the program will just terminate.

Store a Tweet in the Google Spreadsheet

// Transform a tweet's data from the stream into a Google Spreadsheet row

// Add the transformed data as a new row in the sheet

// eslint-disable-next-line @typescript-eslint/no-explicit-any

function storeTweetInSheet(sheet: GoogleSpreadsheetWorksheet, data: any) {

if (!data) return

try {

// #19

const json = JSON.parse(data)

const { id, created_at, text } = json.data

const handle = json.includes.users[0].username

// #20

sheet.addRow([`https://twitter.com/${handle}/status/${id}`, handle, created_at, text])

} catch (err) {

// No need to do anything

}

}

The stream you opened in the handleTweets() function passes chunks of data into the storeTweetsInSheet() function.

#19: Parse the chunk received from the stream. The data that’s going into the Google Spreadsheet is a link to the Tweet (created under comment #20 using the Tweet id and the handle of the user), the handle of the user, the Tweet creation timestamp, and the Tweet text itself.

#20: Add a row to the Google Spreadsheet, with all the data extracted from the Tweet object. (Please note the Google Sheets API rate limits as discussed here: Google Sheets limit)

Ready to build your solution?